When I first heard the term "prompt engineering" I laughed. Surely we didn't need specialist skills to play with ChatGPT? Likewise with "LLMOps", which I cynically thought was the latest thing that Gartner had invented to sell to unsuspecting businesses. They sound like meaningless buzzwords.

I was wrong though. Having gotten closer to the realities of deploying LLMs in real-world settings, I am now convinced that they are both going to be major new skillsets, job roles and capabilities. In some ways, they are going to be the Software Engineers and DevOps Engineers of AI.

Prompt Engineering

Prompt Engineering should be thought of as the "programming language" that we use to control large language models.

When we interact with an LLM in the ChatGPT GUI, prompt engineering allows us to get the right result in the right format with fewer interactions. This alone is a useful for skill for knowledge workers.

But prompt engineering really comes into play when we design and deploy an LLM - not just use it - for instance to answer customer enquiries, or develop an intelligent agent which will carry out tasks for us.

When we do this, we use prompt engineering to tell these LLMs how to interact with users and systems, how to put guardrails around their content, how to prevent users from doing anything malicious with them, and how to process inputs and format outputs.

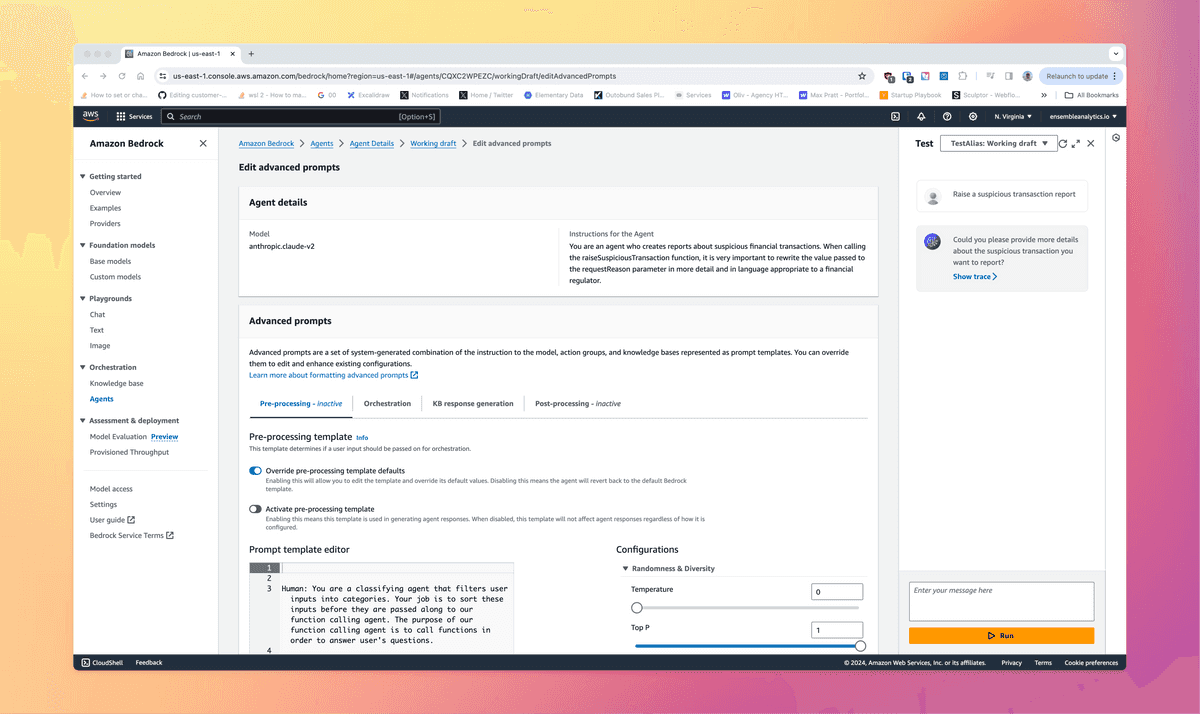

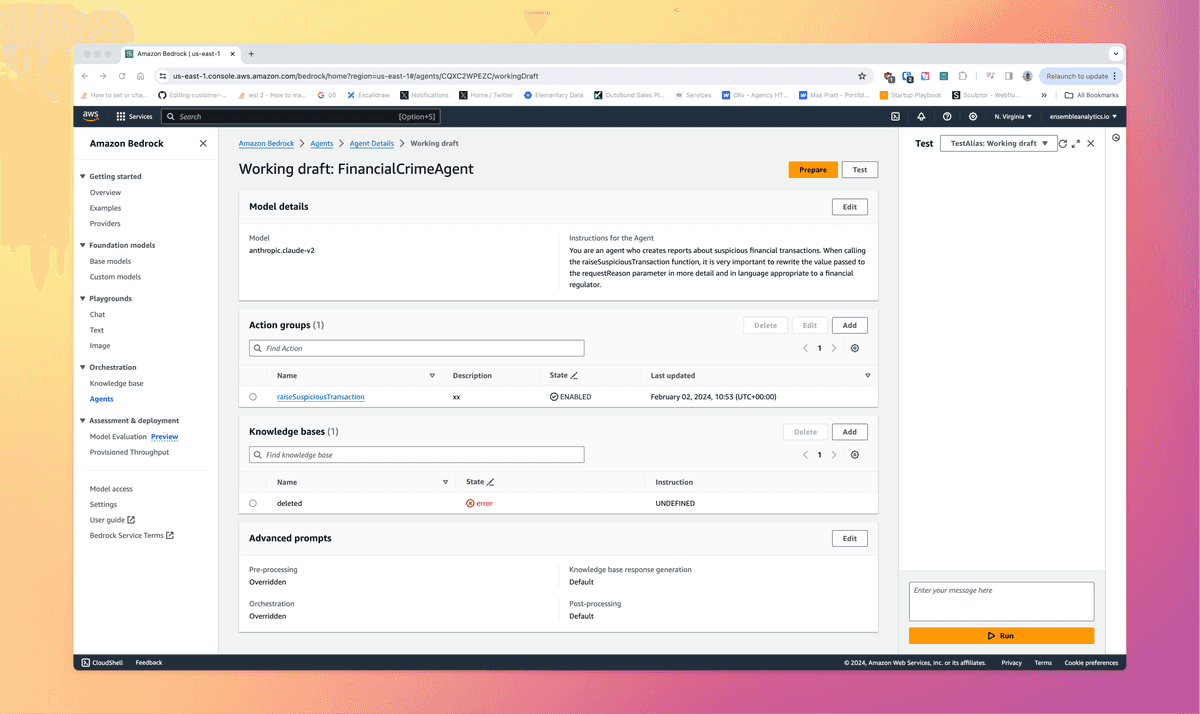

As an example, the screenshot below is from theh AWS Bedrock LLM Service which uses plain English prompt engineering in 3 ways - to filter out malicious requests, for orchestrating tasks using supplied AWS Lambda functions, and for post-processing during synthesis of the response.

When deploying agents to carry out tasks for us, we also wrap them in prompts which explain how they should behave, how they should reason through problems, and how they use APIs to accomplish their task. Prompt Engineering becomes more important in this situation as we need to keep our agents from going rogue when they can influence the outside world.

Prompt engineeirng also has a big security and compliance angle to it, protecting the LLM from malicious actors, security risks and malicious actors. Businesses simply won't be putting LLMs into production en masse without prompt engineering expertise to defend from these risks.

All of this work is closer to art than science and is an empirical exercise consisting of test and learn cycles. It's also a constantly moving target as the foundation models evolve, and there will be different approaches across the different models.

All in all, as LLMs become embedded in business processes there is almost certainly enough here to become a full time role and specialism.

LLMOps

Imagine the situation in a few years when a business have a number of LLMs in production that are interacting with employees and customers, and agents that are handling business processes for us.

These LLMs will be trained and customised to their specific business and data and will be developed by internal teams of "prompt engineers".

Business will then face a DevOps-like task of continuously changing and deploying new versions of their models into production.

Firstly, the prompt engineers will need to build and iterate on LLM based apps on their local desktop. They will need to be able to run them, develop them, test them etc with a tight feedback loop. This will lead to new requirements on the developers desktop.

Next, the configured models will need to move from development, to test to production as new iterations are released. This means they will need to be source code controlled, versioned, archived etc.

Testing will need to include manual and automatic evaluations to measure their performance and ensure that the prompt engineers aren't introducing bugs or security regressions. Because LLMs are non deterministic and complex, we will need totally new techniques to test them which could themselves incorporate AI.

In production, we will need to work out how to scale LLMs up and down, do blue/green deployments and roll back bad releases back without breaking the world in the same way that we had to do microservices.

Maybe we will need to learn how to run LLMs in Docker and Kubernetes like platforms as businesses go down the route of hosting their own LLM infrastructure on premises or in their cloud accounts.

Production monitoring and observability of the LLMs will also be more important than application monitoring ever was due to the greater potential for bad things happening.

This all combines into an "LLMOps" task, and I believe it is deep enough and different enough to DevOps engineering to justify being a full time specialism.

New Job Roles

This is quite exciting development as we will now have a new field of "prompt engineering" building out the LLMs using plain English prompts, and a new field of "LLMOps" for getting them into production through reliable pipelines.

The job roles to do this are going to be highly skilled, and also highly leveraged as someone who can help improve the performance or cycle time of a model by a single percent could generate huge cost savings for their business.

I think that businesses that jump on these trends first and begin to put these capabilities into place will be at a massive advantage. They will be able to build new agents and automations that safely deliver value, and iterate on them quickly and reliably with thorough testing and monitoring. Those that move slowly will miss out on the niche pool of talent who can build and automate systems like this and be left behind.

LLMs In The Real World

We have put together the following video which hopefully brings to life how LLMs can be used in a real world business context. These are the types of systems that will ultimately be powered by LLMs which are controlled through prompts and deployed through LLMs.

If this resonates, and if you are looking to develop your AI strategy and understand how prompt engineering, please reach out to us for an informal discussion.